Safety Assurance of Predictive Systems

Case Study: While long lines and frantically shuffling luggage into plastic bins isn’t a fun experience, airport security is a critical and necessary requirement for safe travel.

No one understands the need for both thorough security screenings and short wait times more than U.S. Transportation Security Administration (TSA). They’re responsible for all U.S. airport security, screening more than two million passengers daily.

As part of their Apex Screening at Speed Program, DHS has identified high false alarm rates as creating significant bottlenecks at the airport checkpoints. Whenever TSA’s sensors and algorithms predict a potential threat, TSA staff needs to engage in a secondary, manual screening process that slows everything down. And as the number of travelers increase every year and new threats develop, their prediction algorithms need to continually improve to meet the increased demand.

The challenge is hosted in Kaggle by Department of Homeland Security which offered the largest prize pool in Kaggle’s history ($1.5 million) 1. The challenge is to reduce high false-alarm that create bottlenecks at airport systems. We aim to analyze the abstractions of the ML-enabled system to infer precondition that provide probable guarantees of safety of the system.

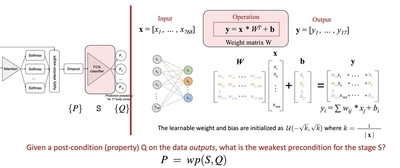

Take the following solution of the challenge as an example. We analyze the source-code and look at the following abstraction of the system, where some low-level details are abstracted away 2.

We propose the weakest precondition reasoning on the above abstraction to infer preconditions. Specifically, we ask that, for a given post-condition of a component in the pipeline, what is the weakest precondition? Starting with the final stage in the pipeline, can we infer the weakest pre-condition and propagate that backward? Below is a one-step reasoning on the above pipeline to infer precondition of the correctness.

Then we aim to propagate the preconditions as much as possible, towards the inputs of the model. Thus, we can provide early signals and estimation of uncertainty. This will enable safety of the deep learning model by specifying false-negatives, which can bring severe safety risks.