Verifying Neural Networks for Individual Fairness

The availability of powerful computational resources and high volume of data has enabled the success of DNN in many applications. Although testing and verifying fairness of ML classifiers e.g., decision tree, support vector machine, received much attention recently, verification of DNN is hard because of the non-linear computational nodes in the network. We proposed Fairify that verifies individual fairness for DNNs in productions, which is recently published in ICSE 2023. The individual fairness property ensures that any two individuals with similar attributes except the protected attribute(s) should receive similar prediction.

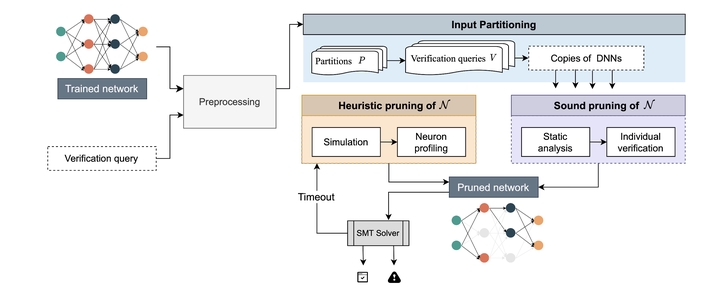

This property is extensively used in fairness testing literature. One reason for that is – group fairness metrics can not capture unfairness when there is same discrimination for two different groups. But individual fairness measures worst-case situations. This property is challenging to verify because we have to check globally, compared to the adversarial robustness property where we are given a nominal input. I transferred the property as fairness pre- and post-condition of the DNN and then used SMT based technique to provide certification or counterexample. The verification has been made tractable in three steps:

- Input partitioning

- Sound neural pruning

- Heuristic-based neural pruning.

The key idea behind the technique is that many neurons always remain inactive and hence do not impact decision-making, when we consider only a smaller part of the input domain. Therefore, Fairify divides the input space into smaller partitions and assigns a copy of DNN to each partition. Then Fairify employs interval arithmetic and layer-wise verification on each neuron to perform sound neural pruning. When the sound pruning is not sufficient, Fairify applies the heuristics of neural activation to prune further.

The technique is scalable to real-world DNNs as well as to the relaxed version of fairness queries. Fairify can provide partial certification and locate fairness violations with counterexample, which would make fairness repair explainable and interactive.